Abstract

In this paper, we propose a novel local descriptor-based framework, called You Only Hypothesize Once (YOHO), for the registration of two unaligned point clouds. In contrast to most existing local descriptors, which rely on a fragile local reference frame to gain rotation invariance, the proposed descriptor achieves rotation invariance through recent techniques in group-equivariant feature learning, which makes it more robust to variations in point density and to noise. Meanwhile, the descriptor in YOHO also has a rotation-equivariant part, which enables us to estimate the registration from just one correspondence hypothesis. This property reduces the search space of feasible transformations, thus greatly improving both the accuracy and the efficiency of YOHO. Extensive experiments show that YOHO achieves superior performance with far fewer RANSAC iterations on four widely used datasets: the 3DMatch/3DLoMatch datasets, the ETH dataset, and the WHU-TLS dataset.
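To make the one-correspondence idea concrete, the following is a minimal sketch, not the YOHO implementation. It assumes that a rotation `R` has already been estimated for a matched point pair (how YOHO derives it from the rotation-equivariant part is not covered here), so the translation follows directly from the two matched points. The second function is the standard RANSAC iteration bound, included only to illustrate why a one-point hypothesis needs fewer draws than a three-point one. The function names `hypothesis_from_one_match` and `ransac_iterations` are hypothetical.

```python
import math

import numpy as np


def hypothesis_from_one_match(p, q, R):
    """Rigid transform (R, t) that maps source point p onto target point q.

    R is ASSUMED to be given, e.g. estimated from the matched descriptors;
    this sketch does not show how such a rotation is obtained.
    """
    t = q - R @ p  # with R fixed, one point pair determines the translation
    return R, t


def ransac_iterations(inlier_ratio, sample_size, confidence=0.99):
    """Standard RANSAC bound on the draws needed for one all-inlier sample."""
    p_good = inlier_ratio ** sample_size  # chance that a sample is all inliers
    if p_good >= 1.0:
        return 1
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p_good))


if __name__ == "__main__":
    # Synthetic check: 90-degree rotation about z plus a known translation.
    R_true = np.array([[0.0, -1.0, 0.0],
                       [1.0, 0.0, 0.0],
                       [0.0, 0.0, 1.0]])
    t_true = np.array([1.0, 2.0, 3.0])
    p = np.array([1.0, 0.0, 0.0])
    q = R_true @ p + t_true

    _, t_est = hypothesis_from_one_match(p, q, R_true)
    print("recovered translation:", t_est)

    # Illustrative inlier ratio only; not a figure from the paper.
    print("draws, 1-point hypothesis:", ransac_iterations(0.1, 1))
    print("draws, 3-point hypothesis:", ransac_iterations(0.1, 3))
```

Under the illustrative assumption of a 10% inlier ratio, the bound for a one-point hypothesis is two orders of magnitude smaller than for a three-point hypothesis, which is the intuition behind the reduced search space.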

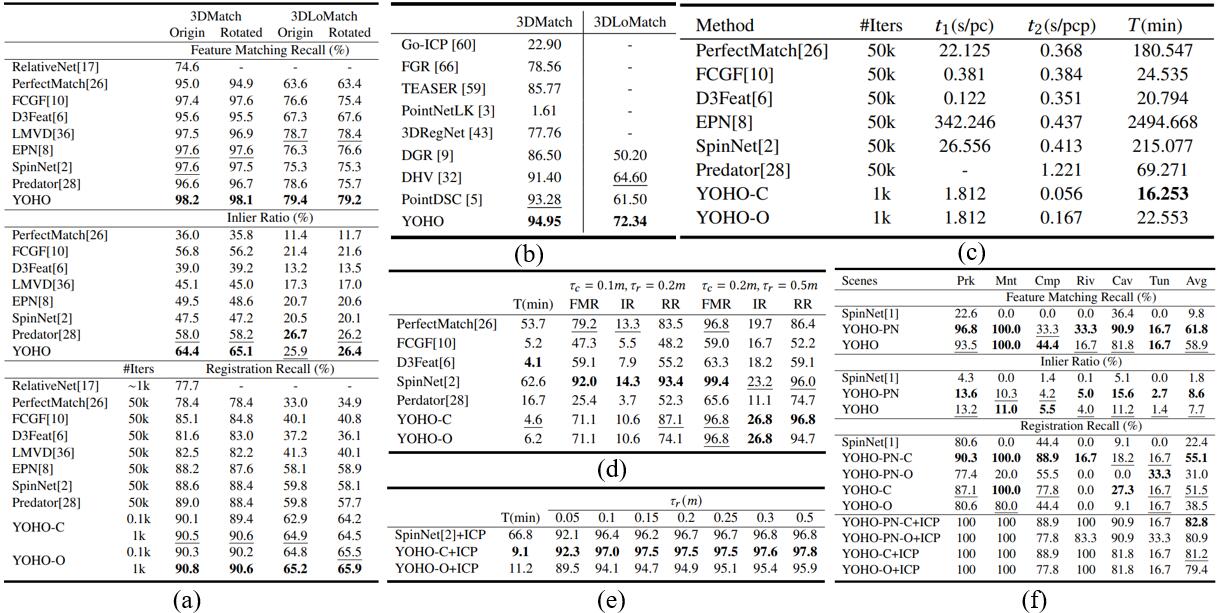

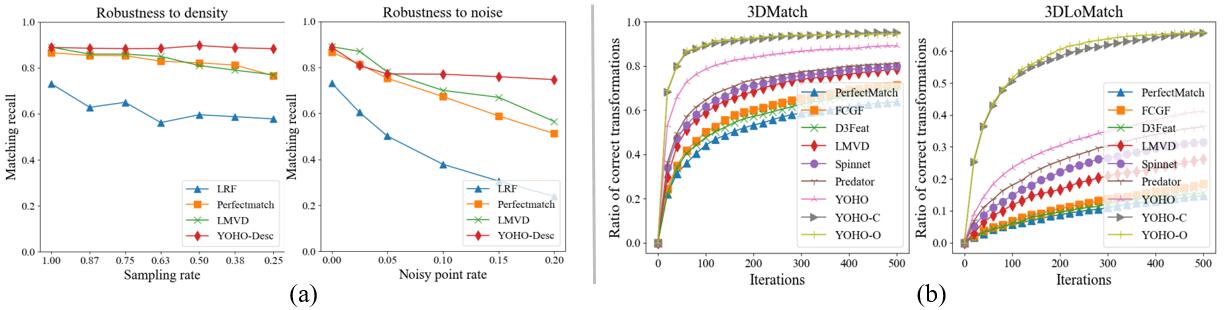

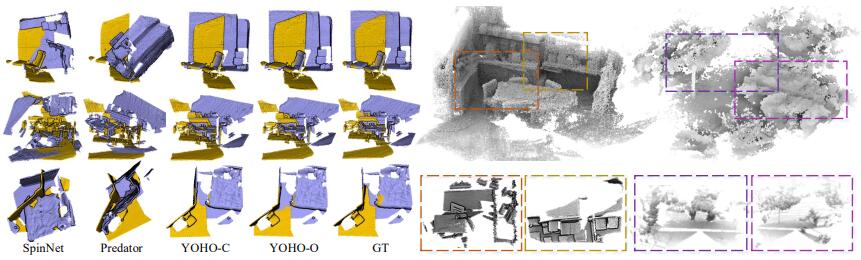

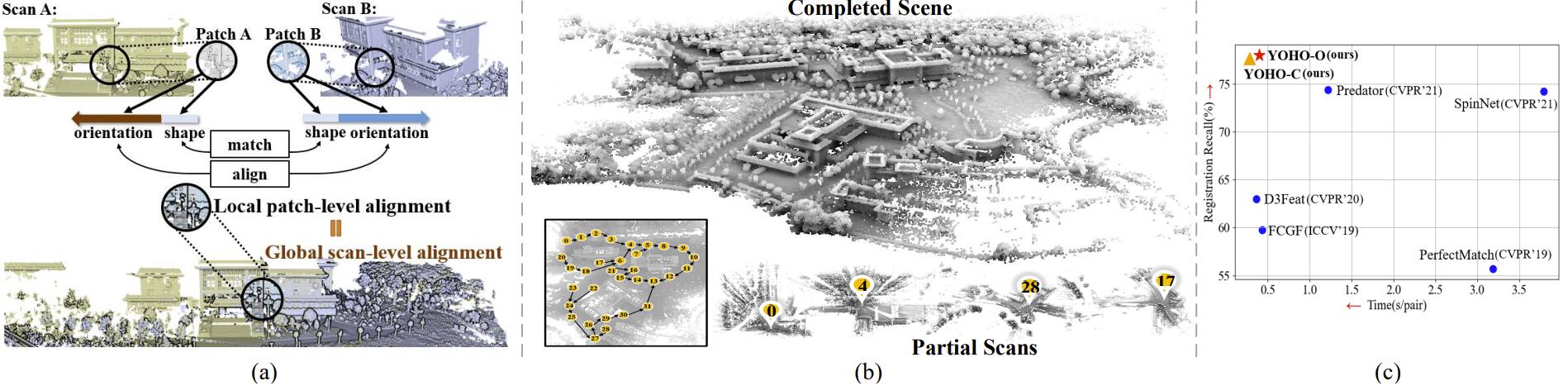

Fig. 1. (a) The key idea of YOHO is to utilize the orientations of local patches to find the global alignment of partial scans. (b) YOHO is able to automatically integrate partial scans into a complete scene, even when these partial scans contain substantial noise and significant point density variations. (c) Registration success rate and average time cost to align a scan pair on the 3DMatch/3DLoMatch datasets. YOHO is more efficient and accurate than previous methods.