Abstract

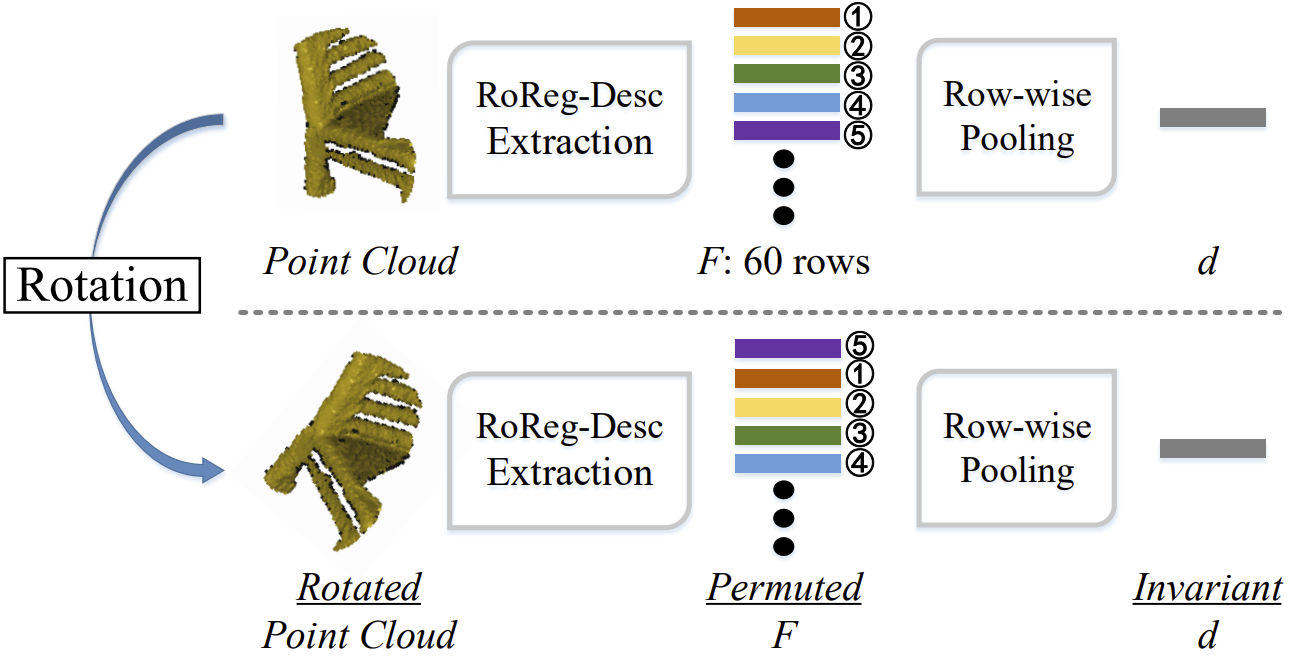

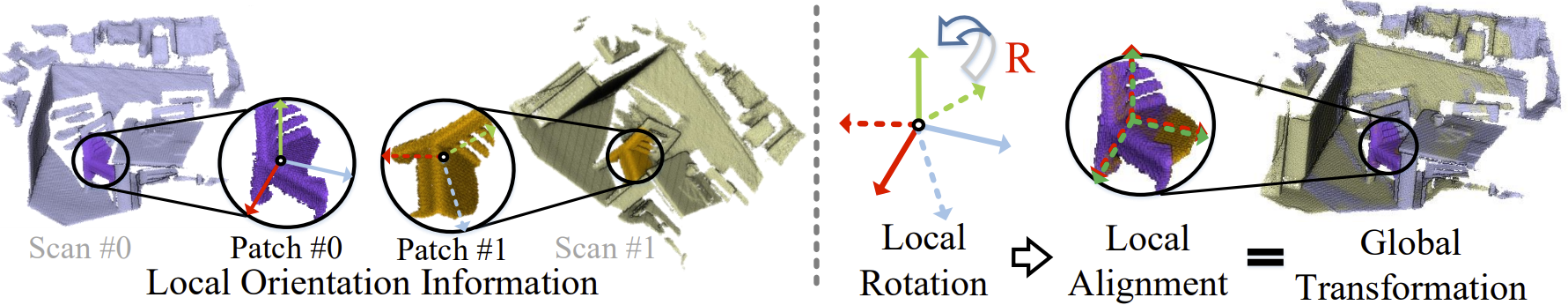

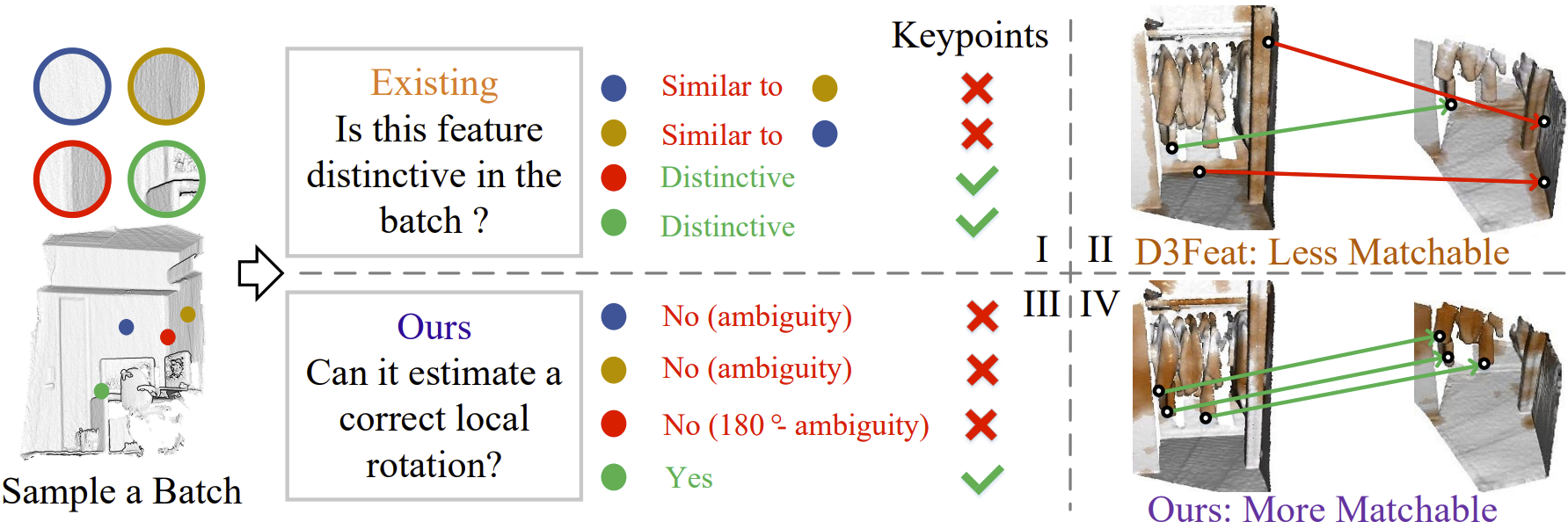

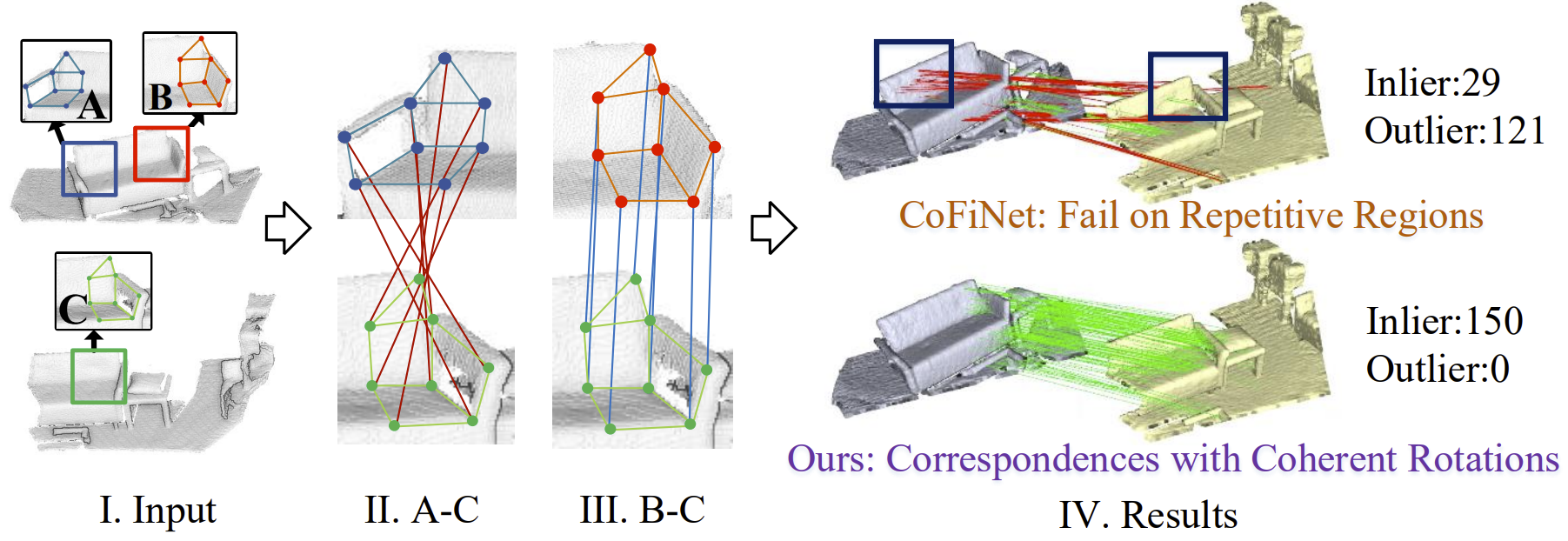

We present RoReg, a novel point cloud registration framework that fully exploits oriented descriptors and estimated local rotations throughout the registration pipeline. Previous methods mainly focus on extracting rotation-invariant descriptors for registration but consistently neglect the orientations of these descriptors. In this paper, we show that oriented descriptors and estimated local rotations are useful in every step of the registration pipeline, namely feature description, feature detection, feature matching, and transformation estimation. Accordingly, we design a novel oriented descriptor, RoReg-Desc, and use it to estimate local rotations. These estimated local rotations enable us to develop a rotation-guided detector, a rotation coherence matcher, and a one-shot-estimation RANSAC, all of which greatly improve registration performance. Extensive experiments demonstrate that RoReg achieves state-of-the-art performance on the widely used 3DMatch and 3DLoMatch datasets and generalizes well to the outdoor ETH dataset. We also provide an in-depth analysis of each component of RoReg, validating the improvements brought by the oriented descriptors and the estimated local rotations.
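The reason a local rotation makes a "one-shot" RANSAC possible is that a single correspondence then determines a full rigid transformation: for a source point $p$, its matched target point $q$, and an estimated rotation $R$, the translation follows as $t = q - Rp$. The NumPy sketch below illustrates this idea only. The function names, the inlier threshold, and the choice to try every correspondence (instead of random sampling) are hypothetical simplifications, not the authors' implementation.

```python
import numpy as np


def one_shot_transform(p, q, R):
    """Rigid transform (R, t) from ONE correspondence p -> q whose local
    rotation R is already known, using q = R p + t."""
    t = q - R @ p
    return R, t


def ose_ransac_sketch(src, tgt, rotations, inlier_thresh=0.1):
    """Hypothetical one-shot-estimation RANSAC.

    src, tgt:  (N, 3) matched keypoint coordinates.
    rotations: (N, 3, 3) estimated local rotation for each match.
    Every single correspondence yields a hypothesis; the hypothesis with the
    most inliers wins. All correspondences are tried here (no sampling).
    """
    best_R, best_t, best_count = None, None, -1
    for p, q, R in zip(src, tgt, rotations):
        R_hyp, t_hyp = one_shot_transform(p, q, R)
        # Residual of every correspondence under this hypothesis.
        residuals = np.linalg.norm(src @ R_hyp.T + t_hyp - tgt, axis=1)
        count = int(np.sum(residuals < inlier_thresh))
        if count > best_count:
            best_R, best_t, best_count = R_hyp, t_hyp, count
    return best_R, best_t, best_count


def rot_z(theta):
    """Rotation by theta radians about the z axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


# Synthetic usage: 20 matches under a known transform, one turned into an outlier.
rng = np.random.default_rng(0)
R_gt = rot_z(0.5)
t_gt = np.array([1.0, -2.0, 0.5])
src = rng.uniform(-1.0, 1.0, size=(20, 3))
tgt = src @ R_gt.T + t_gt
tgt[0] += 5.0  # corrupt match 0
rotations = np.repeat(R_gt[None], 20, axis=0)  # assume perfect local rotations
R, t, n_inliers = ose_ransac_sketch(src, tgt, rotations)
```

Because each hypothesis needs only one correspondence instead of three, the number of hypotheses to test grows linearly with the number of matches. This is the practical appeal of estimating local rotations before transformation estimation.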

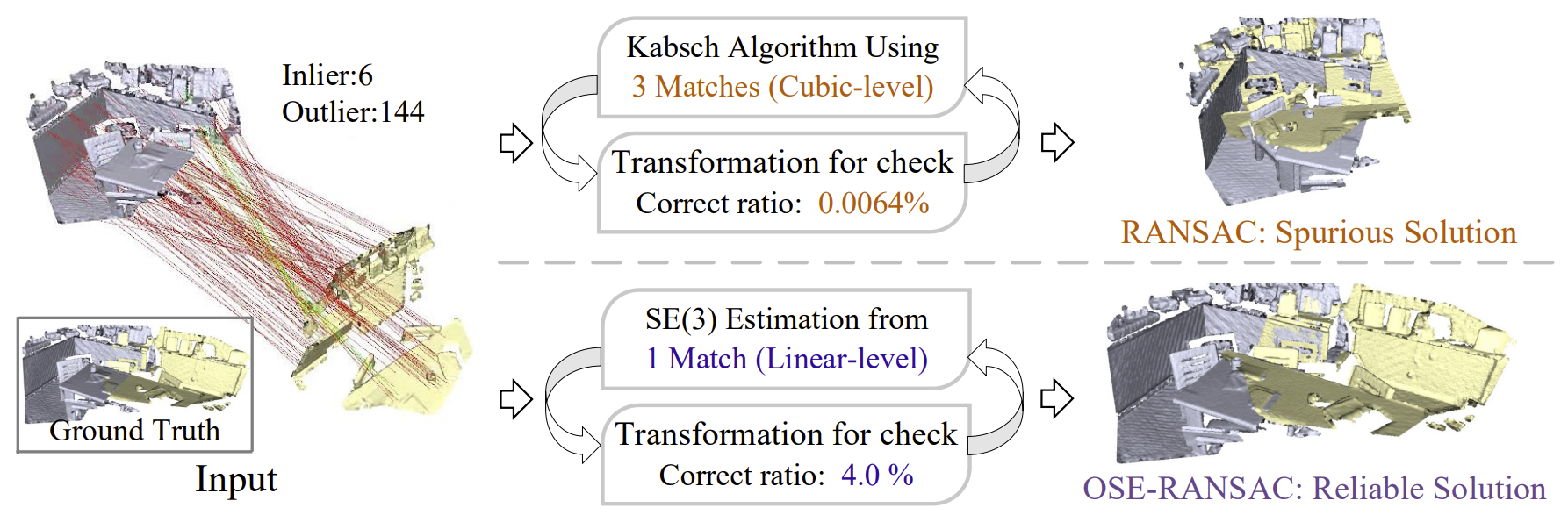

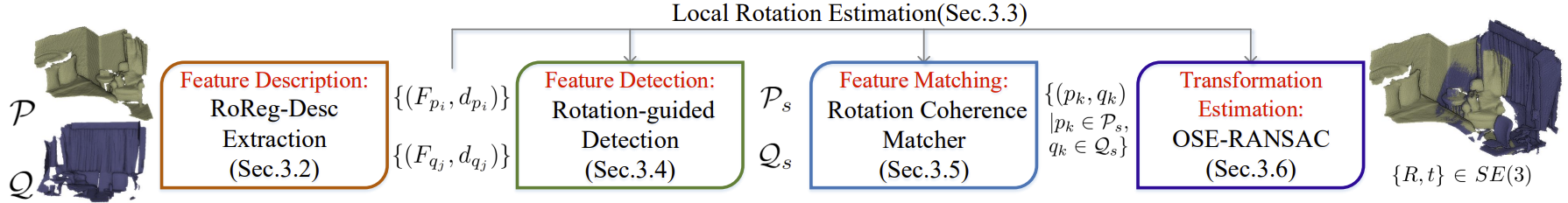

Fig.1 Pipeline of RoReg. We apply oriented descriptors (RoReg-Desc) and estimated local rotations in all four steps of the point cloud registration pipeline, i.e., feature description, feature detection, feature matching, and transformation estimation. Specifically, we first extract RoReg-Descs (Sec.3.2) and show how to estimate local rotations from them (Sec.3.3). Then, a set of keypoints is selected from the RoReg-Descs by the rotation-guided detector (Sec.3.4). The detected keypoints are matched into correspondences by the rotation coherence matcher (Sec.3.5). Finally, the transformation is estimated from these correspondences by the one-shot-estimation RANSAC (OSE-RANSAC) (Sec.3.6).
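The intuition behind rotation coherence can be shown with a small example. If two correspondences are both correct, the local rotations estimated for them should both approximate the same global rotation, so the geodesic angle between them should be small. The sketch below scores each candidate match by how many other candidates have a rotation within an angular threshold. It is a hand-written illustration of that assumption, not RoReg's rotation coherence matcher (Sec.3.5). The function names and the 10-degree threshold are hypothetical.

```python
import numpy as np


def rot_z(theta):
    """Rotation by theta radians about the z axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def rotation_angle(R_a, R_b):
    """Geodesic distance (radians) between two rotation matrices."""
    cos = (np.trace(R_a.T @ R_b) - 1.0) / 2.0
    return float(np.arccos(np.clip(cos, -1.0, 1.0)))


def coherence_scores(rotations, max_angle=np.deg2rad(10.0)):
    """For each candidate match, count the OTHER candidates whose local
    rotation lies within max_angle of its own (hypothetical scoring)."""
    n = len(rotations)
    scores = np.zeros(n, dtype=int)
    for i in range(n):
        for j in range(n):
            if i != j and rotation_angle(rotations[i], rotations[j]) < max_angle:
                scores[i] += 1
    return scores


# Four candidates agree on roughly the same rotation; the last one does not.
rotations = [rot_z(0.50), rot_z(0.52), rot_z(0.48), rot_z(0.55), rot_z(2.0)]
scores = coherence_scores(rotations)
keep = np.flatnonzero(scores >= 2)  # discard matches with little support
```

Here the four mutually consistent candidates each receive support from the other three, while the candidate whose rotation disagrees receives none and is filtered out.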